Summary: Tomography means reconstructing the internal structure of a physical body using X-ray images of the body taken from different directions. Mathematically, the problem is to recover a non-negative function f(x) from a collection of line integrals of f. Here we show how to solve the tomography problem using truncated singular value decomposition (TSVD), a basic reconstruction method for linear ill-posed inverse problems. We keep things simple by working at a low resolution (50×50 tomographic images) so that we can actually build the system matrix A and compute the singular value decomposition (SVD) of A.

Author: Samuli Siltanen.

Software: Matlab (probably works also with Octave).

Download package as zip file: SparseTomoTSVD

Literature: Jennifer L Mueller and Samuli Siltanen: Linear and nonlinear inverse problems with practical applications, SIAM 2012. Below we refer to this book by [MS2012].

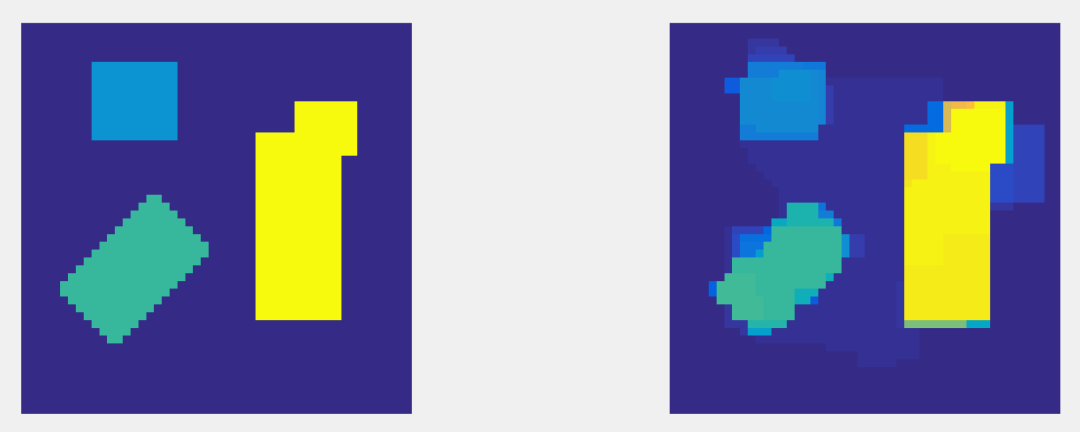

We start by designing a digital test phantom. It consists of four rectangles with various X-ray attenuation coefficients. The attenuation is constant inside each rectangle and zero outside the rectangles. The Matlab routine RectanglePhantom.m constructs the phantom at the resolution MxM; the number M is given as argument. The phantom is so designed that if you increase resolution by taking a larger M, the resulting pixel image gives a more accurate approximation of the same piecewise constant function defined in the unit square.

You can take a look at the 50×50 phantom by running the routine RectanglePhantom_plot.m. It looks like this:

Next we construct the measurement matrix A for the linear tomographic model m=Af. Here the vertical vector f contains the pixel values of the unknown image numbered column-wise according to the Matlab standard (going from image to vertical vector in Matlab: f=f(:);). Since the phantom has size 50×50 = 2500, the matrix A has 2500 columns.

We consider 15 tomographic projection directions distributed with equal steps over 360 degrees.

We construct A in the following “brute-force” way:

- First, we create a 50×50 zero matrix and insert value “1” into pixel number one. Then we apply Matlab’s radon.m routine that simulates X-ray data of the image. We drop the resulting sinogram into a vertical vector and insert it as the first column of A.

- Second, we again create a 50×50 zero matrix and insert value “1” into pixel number two. This leads to the second column of A.

- We continue until the last column of A is constructed.

What is the number of rows in matrix A, or equivalently, the dimension of the measurement vector m? The answer comes from the internal workings of the “radon.m” routine in Matlab. For a 50×50 image, one projection direction has 75 data points. Since we have 15 projection directions, the length of the measurement vector m is 15*75=1125.

The Matlab routine for constructing the matrix A is called tomo1_RadonMatrix_comp.m. It also computes the SVD of A in the form A=UDV^T, where U and V are orthonormal matrices and D is a diagonal matrix with nonnegative singular values located along the diagonal ordered from the largest to the smallest. The matrices A, U, V and D saved into the file RadonMatrix_50_15.mat.

You can take a look at the matrix using tomo1_RadonMatrix_plot.m. In this image you see the nonzero elements of the 1125×2500 matrix A marked as blue dots:

Now it is time to simulate tomographic data. The simplest way to do this in Matlab would be to write f=RectanglePhantom(50) and then drop f to vertical vector form with the command f=f(:). Then we could simulate data by writing m=A*f. However, this approach commits the so-called inverse crime, where the same measurement model is used both for data simulation and for reconstruction. Inverse crime leads to unrealistically good reconstructions and therefore yields unreliable results.

To avoid inverse crime, we introduce some modelling error to the data. We actually create a higher-resolution phantom f2=RectanglePhantom(100), simulate data form that, and downsample the data so that it corresponds to that of a 50×50 target. All of this is implemented in the file tomo2_NoCrimeData_comp.m. There you can also add your favourite amount of simulated white noise to the data by changing the parameter “noiselevel.”

The noisy 75×15 sinogram is called mncn, where m stands for “measurement,” nc for “no crime,” and n for “noisy,” and saved to file tomo2_NoCrime_50_15.mat along with other useful variables.

Running tomo2_NoCrimeData_plot(50,15) gives you a plot comparing the inverse-crime and no-inverse-crime sinograms:

Exercise 1.

Try what happens with Moore-Penrose pseudoinverse when applied to the ill-posed problem of tomography. Write first

>> MPrecon = A\mncn(:);

to compute the minimum norm solution, and then convert the result to image form by

>> MPrecon = reshape(MPrecon,50,50);

Then write

>> imagesc([RectanglePhantom(50),MPrecon])

and see if the result looks like the original phantom. If the picture looks strange, you can restrict the colour range by writing instead

>> imagesc([RectanglePhantom(50),MPrecon],[0,1])

Discuss what you see.

Exercise 2.

Add non-negativity constraint to the minimum norm solution approach. Write

>> MPrecon_nn = lsqnonneg(A,mncn(:));

and then continue as in Exercise 1. Is the non-negative reconstruction better than the one in Exercise 1?

The above Exercises demonstrated that the solution of ill-posed problems require regularized solution techniques, such as TSVD. For the theory behind TSVD, see Chapter 4 of [MS2012]. This is the formula for TSVD:

The idea of TSVD is to use only r_alpha first singular values in the reconstruction. Here you can see a plot of the singular values of the matrix A:

So it seems that around the index 800 the singular values start to get smaller, and somewhere near index 900 there is a sudden drop in the size of singular values. It is the huge size difference between first and last singular values that causes the instability in the least-squares inversion discussed above.

We first try TSVD reconstruction with 20 largest singular values. This is done by running the routine tomo3_TSVD_comp.m with the parameter value r_alpha = 20. The reconstruction looks like this:

We can use more than 20 singular values. This is the result of TSVD algorithm using 100 largest singular values (with the parameter value r_alpha = 100):

However, if we use too many singular values, the reconstruction breaks down. Here is an example with 900 singular values:

Exercise 3:

Write a for-loop where the number r_alpha ranges from 1 to 1125 (r_alpha is the number of largest singular values used in TSVD reconstruction). For each value of r_alpha, calculate the relative error in the reconstruction using the Matlab commands

>> orig = RectanglePhantom(50);

>> rel_error = (norm(recon(:)-orig(:))/norm(orig(:));

The computation of the reconstruction error is possible since in this simulated example we know the ground truth. In practical imaging we do not have that luxury. What is the number of singular values that gives the minimal relative error? Plot the singular values of A and discuss the location where the reconstruction error is minimal.

Exercise 4:

Columns of the matrix V are called singular vectors. They are the building blocks of any reconstruction using TSVD (why?). Here is how to plot the first singular vector as an image:

>> imagesc(reshape(V(:,1),32,32));

Plot a series of singular vectors in this way, for example columns 1, 100, 200, 300, …, of V. Discuss the change in the appearance of the singular vectors as you go on with the process. What does the change mean for the TSVD reconstructions?

Bonus material: Tikhonov regularization

Next we move beyond truncated SVD. Tikhonov regularization is a classical reconstruction methodology for ill-posed inverse problems. See the Wikipedia page and Chapter 5 of [MS2012]. One method for computing Tikhonov regularized solutions is filtering the singular values:

Exercise 5:

make a copy of the file tomo3_TSVD_comp.m and call it tomo4_Tikhonov_comp.m. Modify the file so that the resulting reconstruction is Tikhonov regularized and done with the above filtering of the singular values. Also, create a file tomo4_Tikhonov_plot.m that plots the reconstruction.

Exercise 6:

Compute Tikhonov regularized solutions with a variety of values of the regularization parameter alpha. Which value of alpha gives the lowest relative error in the reconstruction? Is the relative error smaller or larger than the best one you got with TSVD?

Exercise 7 (advanced):

Make a video showing the Tikhonov regularized reconstructions changing when alpha ranges from very small values (minimum for example 0.0000001) to really large values (maximum for example 10000000). Make sure that each frame has the same colormap so that the values are comparable from frame to frame.

Further reading: Total Variation regularization for X-ray tomography.

Could you tell me that ‘Chapter 4 of [MS2012]’ in your blog is from which book?

I am going to use TSVD for study, thank you.

The book is this: Mueller J L and Siltanen S, “Linear and Nonlinear Inverse Problems with Practical Applications.” SIAM 2012.

What is the meaning of tomography?

It is “slice imaging” for historical reasons. When tomography was developed by COrmack and Hounsfield in the 1960’s, they considered imaging a 3D target one 2D slice at a time. Then stacking those 2D reconstructions like a deck of cards will constitute the 3D object.

Thank you for the great post.

I have a question about Exercise 4: I’ve plotted the singular vectors for columns c=1:30:300, it seems to me as c increases, the singular vectors become more and more randomly distributed. But how to explain this?